Buzzfood: Shazam for food!

The name says it all. Buzzfood is an iOS application that identifies food in real time, much like the popular Shazam app, except that it's for food instead of songs. Users can either pick a picture from their gallery or capture one through the camera's live feed. The idea came to me while I was watching HBO's comedy series Silicon Valley. In the show, Jian-Yang creates an app that can only tell whether a food is a hot dog or not. So I decided to create one that detects all kinds of food.

Methodology

I started by searching for open-source, pre-annotated datasets or pre-trained models, but I couldn't find any good ones. So I decided to create my own dataset and contribute it to the open-source community. I had several options for creating and training my model: PyTorch, YOLO, TensorFlow, and so on. Since the model had to run inside an iOS application, that narrowed the options down to Tiny YOLO, TensorFlow Lite, and Create ML. Create ML was the obvious choice.

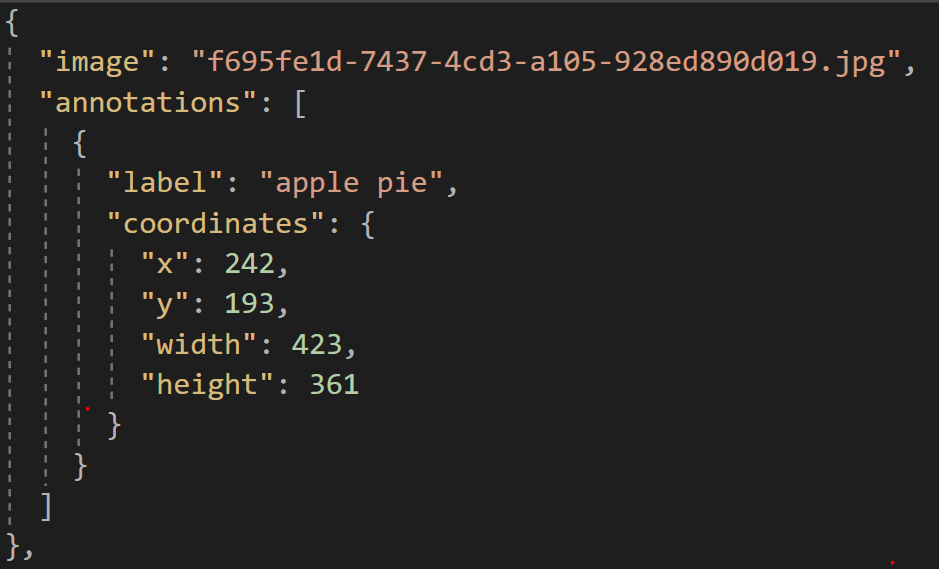

For the custom dataset, I annotated the images with IBM's Cloud Annotations web tool. Create ML reads object-detection annotations from a JSON file with the following structure:

Alright, lemme explain this JSON a lil bit:

=> "image": the file name of the image

=> "annotations": a list in which every entry has the following sub-fields:

- "label": the category of the food inside the bounding box. In this case, it's an image of an apple pie

- "coordinates": a dictionary containing:

> "x": the x-coordinate of the center of the bounding box, measured from the top-left corner of the image

> "y": the y-coordinate of the center of the bounding box, measured from the top-left corner of the image

> "width": the width of the bounding box

> "height": the height of the bounding box
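To make the fields above concrete, here is a minimal Python sketch that builds one annotation entry. It is not the original annotation file: the file name, the pixel values, and the helper `to_createml_annotation` are made up for illustration. It assumes Create ML's object-detection convention, in which "x" and "y" mark the center of the box, so a box given by its top-left corner has to be shifted by half its width and height.

```python
import json


def to_createml_annotation(image_name, label, left, top, width, height):
    """Build one Create ML-style entry from a box given by its top-left corner."""
    return {
        "image": image_name,
        "annotations": [
            {
                "label": label,
                "coordinates": {
                    # Assumption: Create ML expects the box center, not its corner.
                    "x": left + width / 2,
                    "y": top + height / 2,
                    "width": width,
                    "height": height,
                },
            }
        ],
    }


# Hypothetical file name and pixel values, for illustration only.
entry = to_createml_annotation("apple_pie_001.jpg", "apple pie", 40, 60, 200, 120)

# The annotation file is a JSON list with one entry per image.
print(json.dumps([entry], indent=2))
```

An image with several foods in it would simply carry more than one item in its "annotations" list.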

The dataset contains 1500+ images across 25+ food classes, including burger, pizza, bagel, rice, apple pie, doughnut, taco, calamari, and sushi. Using this dataset, I trained the convolutional neural network for 14,000 iterations, which took almost 4 days on an early-2015 MacBook Pro (still pretty awesome). Fortunately, the results were astonishingly good.
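With a training run that takes days, it can be worth checking how evenly the classes are represented before starting. The snippet below is a small Python sketch of such a check, not something taken from the project itself: the function `count_labels` and the tiny sample are hypothetical, and it only assumes the "image" / "annotations" / "label" layout described earlier.

```python
from collections import Counter


def count_labels(entries):
    """Count how many bounding boxes each label has across all images."""
    counts = Counter()
    for entry in entries:
        for annotation in entry["annotations"]:
            counts[annotation["label"]] += 1
    return counts


# Tiny made-up sample; the real dataset is far larger.
sample = [
    {"image": "a.jpg", "annotations": [{"label": "pizza"}, {"label": "pizza"}]},
    {"image": "b.jpg", "annotations": [{"label": "taco"}]},
]

label_counts = count_labels(sample)
print(label_counts.most_common())
```

A class with very few boxes compared to the others is a hint that it may need more images before training.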

While the model was training, I built the UI for the iOS application. The app has three views (like Snapchat): the main screen, food detection on images from the gallery, and real-time food detection. For the buttons, I created custom neumorphic buttons. The model is imported using Core ML, and for each detection the app displays the name of the food with the highest confidence. If a food is detected in the image, the background turns green with an affirming "ding" sound. If no food is detected, the background turns red with a disappointing "dong" sound.
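The feedback logic can be sketched in a few lines. This is a language-agnostic illustration written in Python rather than the app's actual iOS code, and several details are assumptions: the function name `pick_feedback`, the shape of the detection dictionaries, and the `threshold` below which a detection is ignored are all hypothetical.

```python
def pick_feedback(detections, threshold=0.5):
    """Return (label, background color, sound) for the most confident detection."""
    if detections:
        # Keep only the detection the model is most sure about.
        best = max(detections, key=lambda d: d["confidence"])
        if best["confidence"] >= threshold:
            return best["label"], "green", "ding"
    # Nothing detected, or nothing confident enough (threshold is an assumption).
    return None, "red", "dong"


# Made-up detections for illustration.
detections = [
    {"label": "taco", "confidence": 0.31},
    {"label": "pizza", "confidence": 0.87},
]

print(pick_feedback(detections))
print(pick_feedback([]))
```

Keeping this decision in one small function makes it easy to reuse for both the gallery view and the live camera view.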

Demo

The finished application looks something like this:

If you found this project cool, please join me on my Twitch channel, where I live-code the entire project.

Thanks for reading!
