Introduction

Advances in deep learning and computer vision have made it possible to build better autonomous vehicles. Drones are one such vehicle. Their applications range from surveillance and delivery to precision agriculture and weather forecasting. This project explores one such application.

Built on Python-based face recognition and tracking together with a convolutional neural network, the application gives the drone autonomous flight capabilities. There are two modes: Manual and Autonomous. Additional features include Normal, Sports, and Berserk modes for faster flight speeds, flips (forward, backward, left, and right), patrolling, live video streaming, and autonomous snapshots.

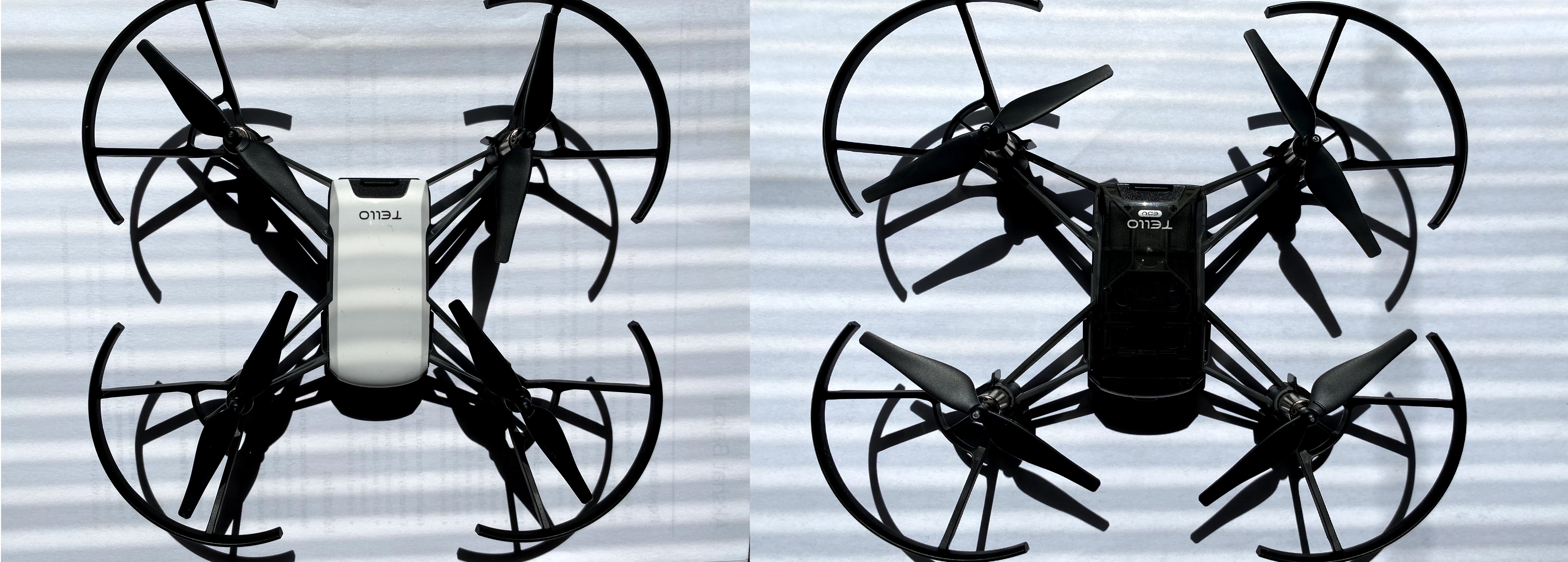

The drones supported by my project are the DJI Tello and Tello Edu. Both have several features that make them good candidates for this project, such as:

- Affordability

- Relatively small size

- Programmable with Python and Swift

- Embedded camera

- Intel processor for stable flight and turbulence reduction

Fig 2. DJI Tello (on the right) and DJI Tello Edu (on the left)

The software tools and technologies used in this project are:

- Programming and Markup Languages: Python, HTML, CSS

- IDEs and Frameworks: Flask, Ajax, Anaconda, Jupyter Notebook, PyCharm

- Python Libraries: OpenCV 4, NumPy, Haar cascade XML file for face recognition, FFMPEG, logging, socket, threading, sys, time, contextlib

Methodology

I started by creating a program that streams video from the drone to my laptop.

Fig 3. Network Backend of the Application

There are two basic network streams: a two-way, full-duplex connection for sending commands and receiving responses between the laptop and the drone, and a one-way (simplex) connection from the drone to the laptop for video streaming. After establishing a sufficiently stable connection with live streaming, I ran OpenCV on the video streamed from the drone for facial recognition. The next step was to figure out how to make the drone's flight follow the movement of my face. I used coordinate geometry to achieve this.
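Before moving on to the geometry, the two network streams described above can be sketched as follows. This is only a minimal illustration, not the project's actual code: it assumes the drone is driven over UDP using the address, ports, and text commands (`command`, `streamon`) from the public Tello SDK, none of which are spelled out in this post. The names `CommandChannel` and `start_video` are hypothetical.

```python
import socket

# Assumed values taken from the public Tello SDK, not from this post.
TELLO_ADDRESS = ("192.168.10.1", 8889)  # drone's command endpoint (UDP)
VIDEO_URL = "udp://0.0.0.0:11111"       # where the drone pushes video after "streamon"


class CommandChannel:
    """Two-way channel: send a text command, then wait for the text reply."""

    def __init__(self, drone_address=TELLO_ADDRESS, local_port=0, timeout=5.0):
        self.drone_address = drone_address
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self.sock.bind(("", local_port))   # port 0 lets the OS pick a free port
        self.sock.settimeout(timeout)

    def send(self, command):
        """Send one command; return the reply string, or None on timeout."""
        self.sock.sendto(command.encode("ascii"), self.drone_address)
        try:
            reply, _ = self.sock.recvfrom(1024)
        except socket.timeout:
            return None
        return reply.decode("ascii", errors="replace").strip()

    def close(self):
        self.sock.close()


def start_video(channel):
    """Enter SDK mode, then ask the drone to start its one-way video stream."""
    return channel.send("command") == "ok" and channel.send("streamon") == "ok"
```

Once `start_video` succeeds, an OpenCV build with FFMPEG support can typically read frames from `VIDEO_URL` through `cv2.VideoCapture`. Keeping commands and video on separate sockets means a slow video decode does not block command replies.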

Fig 4. Coordinate Geometry behind the Follow Algorithm

The Follow Algorithm

- The pixel layout of the screen starts at (0,0) and extends to the resolution of the display ((1280,720) for HD or (3840,2160) for 4K). We use this to form an x-y pixel graph of the video.

- In this graph, I started by drawing a rectangle fixed to the frame of the screen. Then I placed a fixed point at the centroid of this rectangle. This point serves as a static reference for the other, moving points on the screen.

- The second point, which is dynamic, is the centroid of the rectangle containing all the faces detected in the video.

- The locations of these points relative to each other determine the command sent to the drone. The command needs to convey two things: the direction and the speed of the movement.

- The direction in which the drone flies is determined by the position of the dynamic point (the centroid of the rectangle enclosing the detected faces) relative to the static point (the centroid of the rectangle enclosing the frame).

- The speed of the drone is directly proportional to the distance between these two points.

The result looked like this:

Fig 5. Implementation of the Algorithm using Python
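The follow algorithm described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the project's actual implementation: face boxes are assumed to arrive as `(x, y, w, h)` tuples (the format OpenCV cascade detectors return), the direction is expressed as a unit vector in image coordinates, and the speed is scaled so that a face in the corner of the frame maps to a hypothetical `max_speed` of 100. All function names are illustrative.

```python
import math


def enclosing_rectangle(faces):
    """Smallest rectangle (x, y, w, h) that contains every face box."""
    left = min(x for x, y, w, h in faces)
    top = min(y for x, y, w, h in faces)
    right = max(x + w for x, y, w, h in faces)
    bottom = max(y + h for x, y, w, h in faces)
    return left, top, right - left, bottom - top


def centroid(rect):
    """Centre point of a rectangle given as (x, y, w, h)."""
    x, y, w, h = rect
    return x + w / 2, y + h / 2


def follow_command(frame_size, faces, max_speed=100):
    """Return ((dx, dy), speed) for the drone.

    (dx, dy) is a unit vector from the frame centre (static point) to the
    centroid of the faces (dynamic point). Image y grows downward, so a
    positive dy means the faces are below the centre of the frame.
    speed is proportional to the distance between the two points.
    """
    if not faces:
        return (0.0, 0.0), 0.0           # nothing to follow: stay put

    width, height = frame_size
    static_x, static_y = centroid((0, 0, width, height))
    dynamic_x, dynamic_y = centroid(enclosing_rectangle(faces))

    offset_x, offset_y = dynamic_x - static_x, dynamic_y - static_y
    distance = math.hypot(offset_x, offset_y)
    if distance == 0:
        return (0.0, 0.0), 0.0           # already centred

    # Farthest the dynamic point can be from the centre: half the diagonal.
    max_distance = math.hypot(width / 2, height / 2)
    speed = max_speed * distance / max_distance
    return (offset_x / distance, offset_y / distance), speed
```

For example, with a 960x720 frame and a single face box at `(700, 300, 100, 120)`, the dynamic point is 270 pixels to the right of the centre, so the sketch returns the direction `(1.0, 0.0)` and a speed of 45. How the direction and speed are translated into actual drone commands depends on the SDK calls used and is left out here.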

I then set up a local web server using Flask to provide a UI for the project. At this point, the backend was pretty much ready. The next step was creating a cool UI/UX for the application, for which I used HTML and CSS.

Results

This video shows me moving around a small room and the drone following me.

Test 1:

Test 2:

The drone was able to track my face and follow me well. The latency is clearly visible, but it could likely be reduced with better hardware.

Future Work

Although the Drone-Follow algorithm works well, there are some things that could really improve the application:

- Integrating C++ to improve latency and response time, bypassing the comparatively slow Python I/O. I've also seen significantly improved response times using Intel's distribution of Python, so I will consider using that too.

- The DJI camera is good enough to track faces, but there is scope for adding capabilities such as more range, better resolution, zoom, and depth sensing.

- Upgrading the WiFi card in the drone would certainly improve the transmission latency, especially in local networks.

- I'm curious to see the results with other computer vision libraries such as dlib or YOLO.

- Improved battery life for the Drone.

Finally, you can find my project in my GitHub repo here.
